How bad would human extinction be?

This post is part of Rethink Priorities’ Worldview Investigations Team’s CURVE Sequence: “Causes and Uncertainty: Rethinking Value in Expectation.” The aim of this sequence is twofold: first, to consider alternatives to expected value maximization for cause prioritization; second, to evaluate the claim that a commitment to expected value maximization robustly supports the conclusion that we ought to prioritize existential risk mitigation over all else.

Executive Summary

Background

- This report builds on the model originally introduced by Toby Ord on how to estimate the value of existential risk mitigation.

- The previous framework has several limitations, including:

- The inability to model anything requiring shorter time units than centuries, like AI timelines.

- A very limited range of scenarios. In the previous model, risk and value growth can each take different forms, and each combination represents one scenario.

- No explicit treatment of persistence (how long the mitigation efforts’ effects last) as a variable of interest.

- No easy way to visualise and compare the differences between different possible scenarios.

- No mathematical discussion of the convergence of the cumulative value of existential risk mitigation, as time goes to infinity, for all of the main scenarios.

- This report addresses the limitations above by enriching the base model and relaxing its key stylised assumptions.
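To make the base model concrete, here is a minimal Python sketch of one common way to write it down. It is not the report's own code. The function names, the timing convention (value in a period counts only if we survive that period), and the reading of a risk reduction as a relative cut lasting `persistence` periods are all assumptions made for illustration.

```python
from typing import Callable

Profile = Callable[[int], float]  # maps a period index t = 1, 2, ... to a number


def expected_value(risk: Profile, value: Profile, horizon: int) -> float:
    """Sum of value(t), each weighted by the probability of surviving through period t."""
    survival = 1.0
    total = 0.0
    for t in range(1, horizon + 1):
        survival *= 1.0 - risk(t)  # survive period t as well as all earlier ones
        total += survival * value(t)
    return total


def mitigation_value(risk: Profile, value: Profile, horizon: int,
                     reduction: float = 0.0001, persistence: int = 1) -> float:
    """Gain in expected value from cutting risk by the relative fraction
    `reduction` during the first `persistence` periods (hypothetical convention)."""
    def mitigated(t: int) -> float:
        return risk(t) * (1.0 - reduction) if t <= persistence else risk(t)

    return expected_value(mitigated, value, horizon) - expected_value(risk, value, horizon)


# Illustrative numbers only: constant 10% risk and one unit of value per period.
baseline = expected_value(lambda t: 0.1, lambda t: 1.0, horizon=2000)
gain = mitigation_value(lambda t: 0.1, lambda t: 1.0, horizon=2000)
```

Under these assumptions, with constant risk r and constant value v the sum approaches v(1 - r)/r as the horizon grows, and a one-period relative reduction f adds roughly f times v.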

What this report offers

- There are many possible risk structure and value trajectory combinations. This report explicitly considers 20 scenarios.

- The report examines several plausible scenarios that were absent from the existing literature on the model, like:

- decreasing risk (in particular exponentially decreasing) and Great Filters risk.

- cubic and logistic value growth, both of which are widely used in adjacent literatures, so the report makes progress in consolidating the model with those approaches.

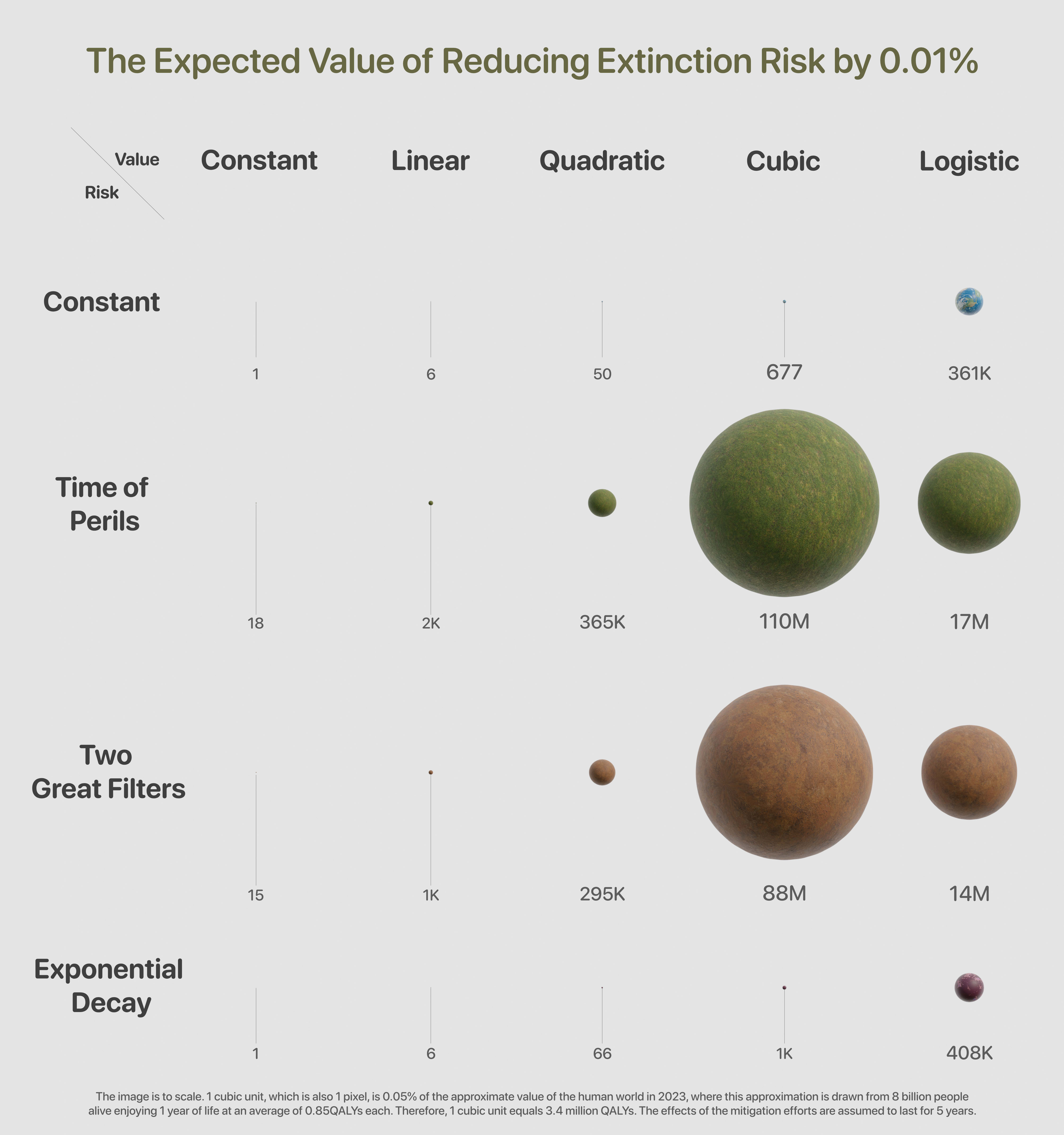

- It offers key visual comparisons and illustrations of how risk mitigation efforts differ in value, like Figure 1 below.

- The report is accompanied by an interactive Jupyter Notebook and a generalised mathematical framework that can, with minor input by the user, cope with any arbitrary value trajectory and risk profile they wish to investigate.

- This acts as a uniquely versatile tool that can calculate and graph the expected value of risk mitigation.

- The user can also adjust all the parameters in the 20 default scenarios.
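As a rough illustration of how scenarios arise from combining a risk structure with a value trajectory, the sketch below builds a small grid in Python. It is not the accompanying notebook's interface. Every function name, functional form, and parameter value here is a hypothetical placeholder (including the positive long-run floor on decreasing risk), and the grid covers only a subset of the combinations, not the report's 20 scenarios.

```python
import math
from itertools import product


def constant_risk(r=0.001):
    return lambda t: r


def decreasing_risk(r0=0.01, floor=0.0001, decay=0.05):
    # Exponential decay from r0 towards an assumed positive long-run floor.
    return lambda t: floor + (r0 - floor) * math.exp(-decay * t)


def great_filters_risk(low=0.0001, high=0.01, filters=((1, 10), (50, 60))):
    # Unusually high risk inside each (start, end) window, low risk elsewhere.
    return lambda t: high if any(a <= t <= b for a, b in filters) else low


def constant_value(v=1.0):
    return lambda t: v


def cubic_value(v=1.0):
    return lambda t: v * t ** 3


def logistic_value(cap=1000.0, rate=0.05, midpoint=100.0):
    return lambda t: cap / (1.0 + math.exp(-rate * (t - midpoint)))


def expected_value(risk, value, horizon):
    """Value per period weighted by the probability of surviving to that period."""
    survival, total = 1.0, 0.0
    for t in range(1, horizon + 1):
        survival *= 1.0 - risk(t)
        total += survival * value(t)
    return total


RISKS = {"constant": constant_risk(), "decreasing": decreasing_risk(),
         "great_filters": great_filters_risk()}
VALUES = {"constant": constant_value(), "cubic": cubic_value(),
          "logistic": logistic_value()}


def scenario_grid(horizon=1000):
    """Expected value for every (risk structure, value trajectory) pair."""
    return {(r, v): expected_value(RISKS[r], VALUES[v], horizon)
            for r, v in product(RISKS, VALUES)}


grid = scenario_grid(horizon=200)
```

Swapping in a different callable for either dictionary entry is enough to explore another combination, which is the kind of flexibility the generalised framework is described as offering.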

Takeaways

- In all 20 scenarios, the cumulative value of mitigation efforts converges to a finite number, as the time horizon goes to infinity.

- This implies that it is meaningful to talk about the amount of long-term value obtained from mitigating risk, even in an infinitely long universe.

- Because no scenario yields infinite value, assigning even a minuscule credence to any one of them does not automatically let it overshadow the collective view.

- It helps clarify what assumptions would be required for infinite value.

- The report introduces the Great Filters Hypothesis:

- It states that humanity will face a number of great filters, during which existential risk will be unusually high.

- This hypothesis is a more general, and thus more plausible, version of what is commonly discussed under the name ‘Time of Perils’, which is the one-filter case.

- Persistence (the duration of the risk mitigation’s effects) plays a key role in our estimates. This suggests that further work investigating this role, and obtaining better empirical estimates of different interventions’ persistence, would be highly impactful. Other tentative lessons:

- Interventions to increase persistence exhibit diminishing returns, and are most valuable for mitigation efforts exhibiting small persistence.

- Great value requires relatively high persistence, and the latter could be implausible.

- It is often assumed that, when considering long-term impact, existential risk mitigation is, in expectation, enormously valuable relative to other altruistic opportunities. There are a number of ways that could prove to be false. One possibility, which this report emphasises, is that the vast value of risk mitigation is only found in certain scenarios, each of which makes a whole host of assumptions.

The expected value of risk mitigation therefore strongly depends on our beliefs about these assumptions. And, depending on how we decide to aggregate our credences and which scenarios we allow for, astronomical value might be off the table after all.
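The role of persistence can be illustrated with a small Python sketch under the simplest scenario, constant risk and constant value. This is not the report's calculation. The parameter values (1% risk per period, a 10% relative reduction) and the convention that the reduction applies for the first `persistence` periods and then vanishes are assumptions chosen only to make the pattern visible.

```python
def expected_value(risk, value, horizon):
    """Value per period weighted by the probability of surviving to that period."""
    survival, total = 1.0, 0.0
    for t in range(1, horizon + 1):
        survival *= 1.0 - risk(t)
        total += survival * value(t)
    return total


def gain_from_mitigation(persistence, r=0.01, v=1.0, reduction=0.1, horizon=5000):
    """Extra expected value when risk is cut by the relative fraction `reduction`
    for the first `persistence` periods (all defaults are illustrative)."""
    baseline = expected_value(lambda t: r, lambda t: v, horizon)
    mitigated = expected_value(
        lambda t: r * (1.0 - reduction) if t <= persistence else r,
        lambda t: v,
        horizon,
    )
    return mitigated - baseline


persistences = [1, 10, 100, 1000]
gains = [gain_from_mitigation(p) for p in persistences]

# Average gain per additional period of persistence between consecutive settings.
marginal = [
    (gains[i + 1] - gains[i]) / (persistences[i + 1] - persistences[i])
    for i in range(len(gains) - 1)
]

for p, g in zip(persistences, gains):
    print(f"persistence={p:>4}  gain={g:.3f}")
```

In this toy setting the total gain keeps rising with persistence while each additional period adds less than the one before, which matches the diminishing-returns lesson above. How large the gain gets depends heavily on whether long persistence is plausible at all.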

Figure 1 (see header's image): This is a visual representation of the estimated expected value of reducing existential risk by 0.01%. The image is to scale and one cubic unit is the size of the world under constant risk and constant value, the top-left scenario.

This abridged technical report is accompanied by an interactive Jupyter Notebook. The full report is available here.